The following work term report, titled “Integrating Vulnerability Scanning for Docker Containers into Cloud Systems”, has been prepared regarding my work at Wind River Systems during my 2A co-op term.

This report compares three vulnerability scanning tools in terms of performance and potential for future integration, to address the security scanning roadblocks that arose from containerizing cloud infrastructure software.

about StarlingX: a cloud infrastructure software

For a bit of background information, Wind River Systems’ Titanium Cloud, a virtualization platform, prides itself on its low latency, security and optimized “six-nine” availability1, where “six-nine” means an uptime of 99.9999% in a year. Recently, the core functionality of Titanium Cloud was open-sourced as StarlingX2, which is now transitioning from virtual machines on bare metal to an additional layer of abstraction: Docker containers running OpenStack services, managed by Kubernetes.

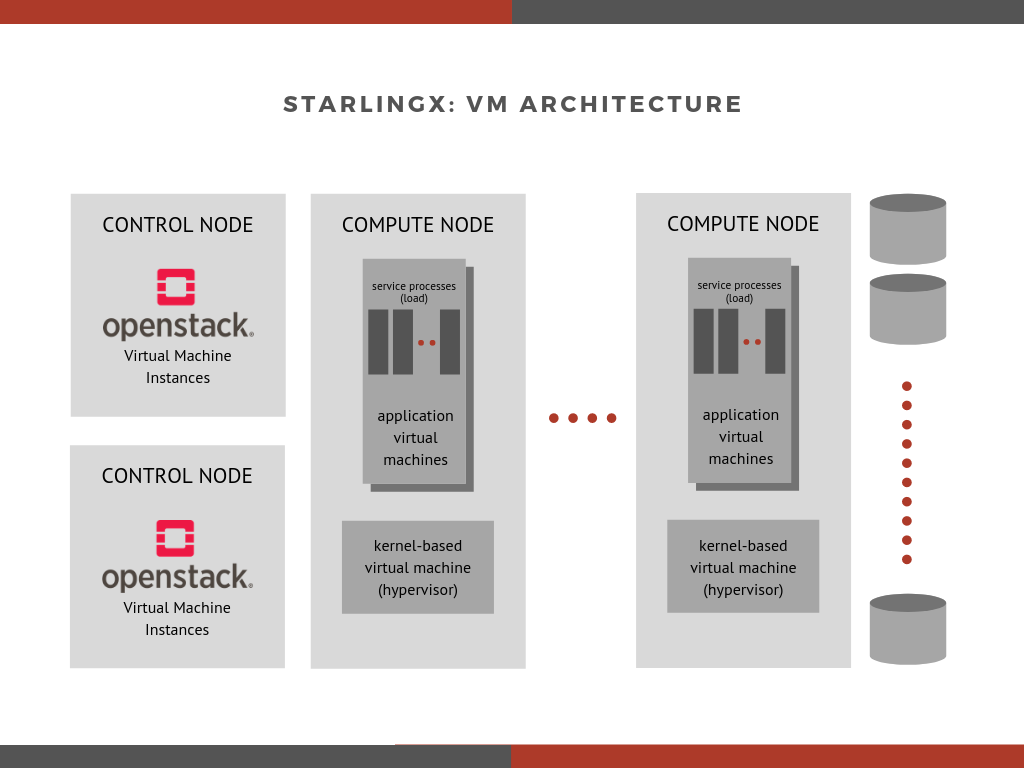

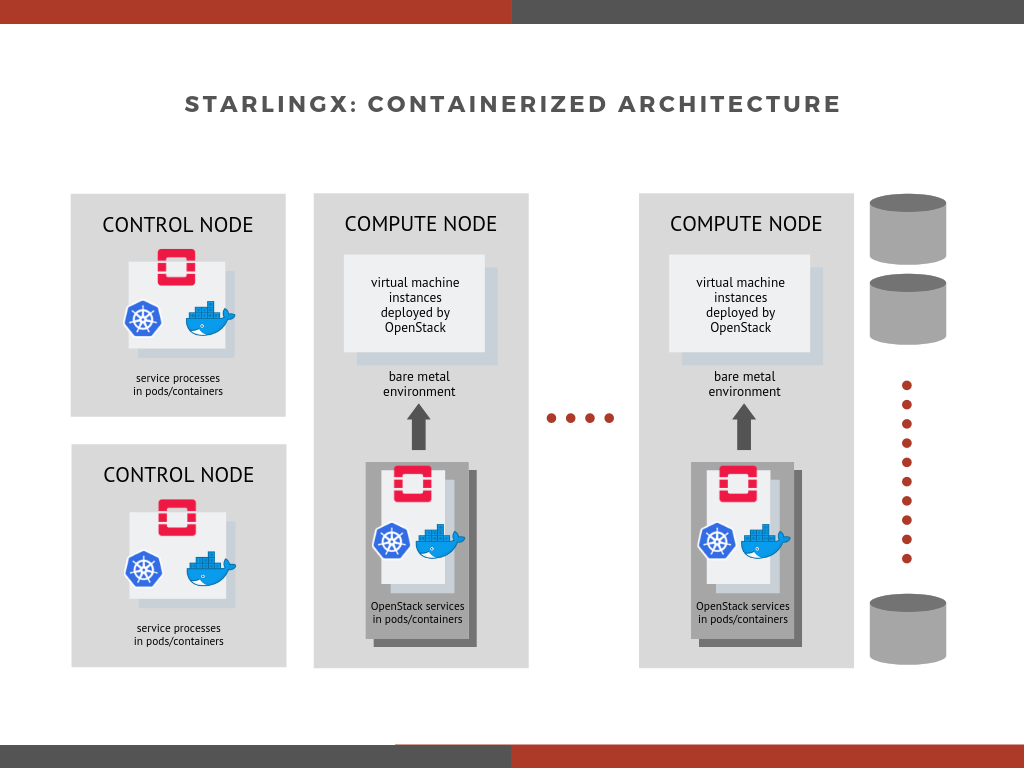

The two figures below demonstrate the difference between the legacy virtual machine architecture and the newly Dockerized architecture3.

figure 1: simplified illustration of the deployment architecture of StarlingX, with virtual machines and OpenStack on bare-metal.

figure 2: post-cutover, simplified illustration of the deployment architecture of StarlingX, with containerized OpenStack services managed by Kubernetes.

why a security scanning tool?

For StarlingX, performing security and vulnerability analysis is indispensable to maintaining high reliability as a cloud system. But to maintain rigorous “six-nine” uptime, it’s unfeasible to regularly poll for available vulnerability fixes through the operating system’s package manager.

Not only does polling bring in unnecessary resource consumption, but as the number of cloud server nodes increases, it also becomes proportionally more difficult and expensive to maintain similar standards of frequent vulnerability analysis across the cloud server.

The need for a scanner incorporated into StarlingX exists not only as a unified risk monitoring tool for the developers, but as an easily manageable scanning service to be run as a cron job for the clients’ own deployments.

the scanning problem with the cut-over to containers

With the transition to containerized services, updating security scanning functionality rises in priority: since the core software now lies in a different architecture, the need for changes in vulnerability scanning becomes more pronounced.

Fortunately, the usage of Docker containers opens up a lot of doors in terms of available tools. From a security scanning standpoint, the transition from virtual machines to containers implies less emphasis on kernel vulnerabilities through framework isolation, and more freedom to peruse and scan through custom StarlingX packages instead. Since Docker containers consist of layers with packages deployed through Dockerfiles, a tree-like structure of dependencies can be traversed and obtained. However, as opposed to the legacy virtual machine architecture, packages can now be located within any image layer.

Hence, the search for a more suitable vulnerability scanner begins.
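To illustrate why packages can sit in any layer, here is a minimal sketch that folds a stack of layers into the final package set while remembering which layer introduced each package. The `Layer` structure, the layer names and the way packages are spread across layers are all hypothetical, chosen only for illustration; real image layers are filesystem diffs from which a scanner first has to extract the package database.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    """Hypothetical, simplified view of one image layer."""
    name: str
    added: dict = field(default_factory=dict)   # package -> version installed in this layer
    removed: set = field(default_factory=set)   # packages deleted in this layer


def resolve_packages(layers):
    """Walk layers from base to top; return {package: (version, introducing_layer)}."""
    installed = {}
    for layer in layers:
        for pkg in layer.removed:
            installed.pop(pkg, None)
        for pkg, version in layer.added.items():
            installed[pkg] = (version, layer.name)
    return installed


# Illustrative layer stack, not the actual StarlingX image layout.
layers = [
    Layer("base", added={"bash": "4.2.46-31", "tar": "1.26-35"}),
    Layer("tools", added={"curl": "7.29.0-51"}, removed={"tar"}),
    Layer("app", added={"python": "2.7.5"}),
]
packages = resolve_packages(layers)
for pkg, (version, origin) in sorted(packages.items()):
    print(f"{pkg} {version} (from layer {origin})")
```

A scanner that only inspected the top layer would miss `bash` and `curl` here, which is why layer-aware traversal matters for containerized images.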

decision evaluation criteria: context, requirements and constraints

To begin, StarlingX Docker images are based on a partially stripped-down version of CentOS 7.6, with only the bare dependency requirements. Thus, the performance of vulnerability scanners will be evaluated in terms of their results for CentOS:7.6.1810 instead of an overall performance on various images. Due to this single-purpose use, either results closely tuned to CentOS images, or the flexibility to customize the source code, would be highly desirable.

Secondly, the location of integration for vulnerability scanning has yet to be decided, but there are two specific directions: either located on the user’s side, deployed as an add-on service as part of the StarlingX project, or on the developer’s side as a tool integrated into the workflow. Since the nature of this vulnerability scanner service has yet to be set, flexibility in terms of the deployment is a critical constraint.

Hence depending on its future usage, the scheduling frequency of the scan job would sway the need for quick scan times: weekly or monthly cron jobs on the client’s side wouldn’t have significant scan time requirements as opposed to immediate scans after every developer’s change. Additionally, if deployed as part of a tech workflow or pipeline, more importance would also be placed on the latency between machines, as well as the time it takes to complete a full scan. However, since the usage of the tool has yet to be determined, the overall scan time and the time it takes to update the database will be used as the determining criteria.

Finally, the scanner’s additional resource consumption should be noted: either computational or memory-wise.

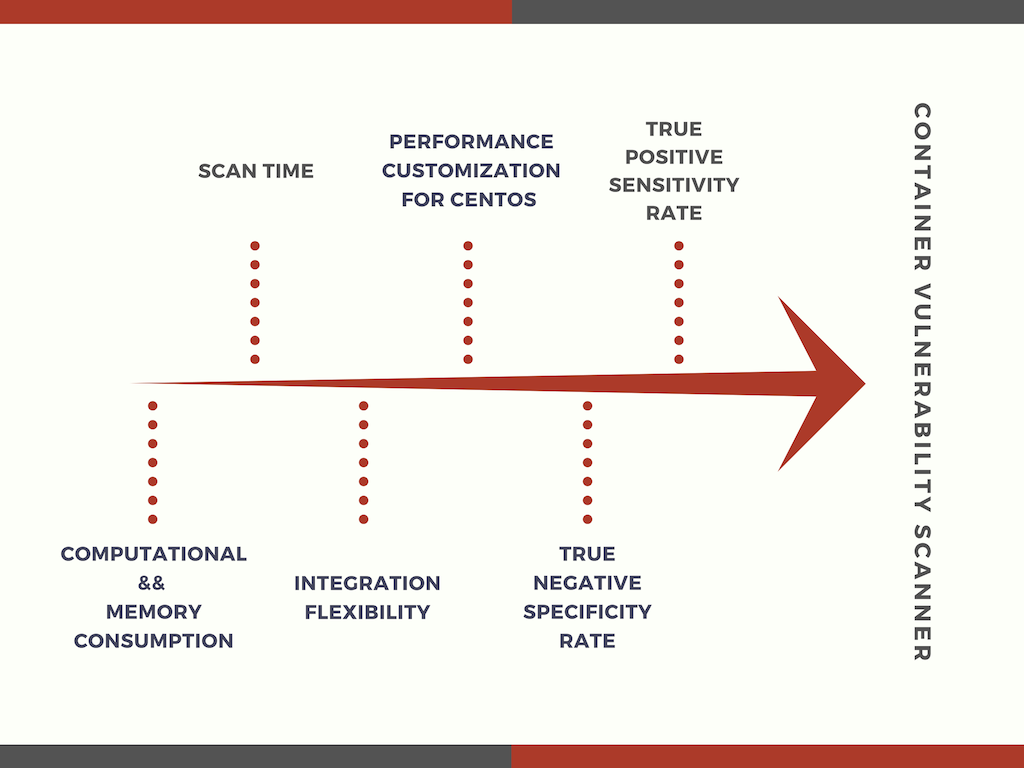

Nonetheless, whether the vulnerability scanner serves as a service for the customer or as a developer tool within a pipeline, the candidate tools will be judged first and foremost by their existing or potential performance, measured in terms of the true positive accuracy rate (the sensitivity of the scanner) and a more global measure of accuracy that takes the relative specificity into account.

The true positive accuracy rate is a measure that demonstrates the competency of the scanner in terms of how sensitive it is towards detecting correct vulnerabilities.

More formally:

$$\text{Sensitivity} = \frac{TP}{TP + FN}$$

where TP represents the true positives found by the scanner (the vulnerabilities that were indeed present in the Docker image) and FN represents the false negatives (the vulnerabilities that were present in the image but that the scanner missed). Obtaining a high sensitivity rate means that vulnerabilities present in the image are unlikely to be overlooked by the scanner.

It’s also to be noted that the quantification of true positive results is under the assumption that the union of all vulnerabilities found by all three scanners is the complete set of all the vulnerabilities. Thus, this set would not include the unfound, but present, vulnerabilities. In reality, the true number of vulnerabilities can’t feasibly be obtained, and hence each scanner’s true sensitivity would be uniformly lower than the measured value.

This same logic also assumes that the only true negatives are the ones that were correctly avoided by some, but not all, scanners. In other words, true negatives are drawn only from the union of the scan results of the three compared scanners, not from the “universal” set of all vulnerabilities avoided.
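The union assumption above can be sketched with plain sets. This is only an illustration: the scanner names and the placeholder identifiers (`CVE-A` and so on) are made up, and `verified_present` stands for whatever manual verification decides is truly present in the image.

```python
def relative_counts(scanner_hits, all_scans, verified_present):
    """Confusion counts for one scanner, relative to the union of all scan results."""
    universe = set().union(*all_scans.values())   # everything any scanner reported
    present = verified_present & universe         # verified vulnerabilities within that union
    return {
        "TP": len(scanner_hits & present),              # reported and truly present
        "FP": len(scanner_hits - present),              # reported but not present
        "FN": len(present - scanner_hits),              # present but missed by this scanner
        "TN": len(universe - scanner_hits - present),   # correctly not reported
    }


# Placeholder scan results for three hypothetical scanners.
all_scans = {
    "scanner_a": {"CVE-A", "CVE-B", "CVE-C"},
    "scanner_b": {"CVE-B", "CVE-D"},
    "scanner_c": {"CVE-B"},
}
verified_present = {"CVE-B", "CVE-D"}

counts = {
    name: relative_counts(hits, all_scans, verified_present)
    for name, hits in all_scans.items()
}
for name, c in counts.items():
    print(name, c)
```

Note that a vulnerability that no scanner reported never enters `universe`, so it can count neither as a false negative nor as a true negative, which is exactly the limitation described above.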

As for the specificity, or true negative rate, this is a measure of how many vulnerabilities are correctly identified as not present in the Docker image. If a high specificity rate is present, then false positives are minimized, but perhaps at the expense of minimizing the number of true positive results.

$$\text{Specificity} = \frac{TN}{TN + FP}$$

Since the complete set of true negatives can’t feasibly be obtained, it would be difficult to obtain a reasonable measure of specificity.

However, both the sensitivity and the specificity are important to determine the performance of the vulnerability scanner. Hence, the overall accuracy rate is used as the metric of comparison: it combines the true positives and false negatives from the sensitivity with the relative true negatives and false positives from the specificity. In terms of performance, the better vulnerability scanner should be both sensitive and specific: it should rarely overlook vulnerabilities that are present, and rarely mistakenly report vulnerabilities that aren’t present.

Formally, the accuracy is calculated as:

$$\text{Accuracy} = \frac{TP + TN}{TP + FN + TN + FP}$$

using the same assumed set of true negatives obtained from a merge of all the scan results, similarly to the assumption made for sensitivity.
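As a quick check of how the three measures relate, the sketch below computes them from a set of confusion counts. The counts are arbitrary illustrative values, not results from any of the scans in this report.

```python
def sensitivity(tp, fn):
    """Share of present vulnerabilities that the scanner reported."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """Share of absent vulnerabilities that the scanner correctly left out."""
    return tn / (tn + fp)


def accuracy(tp, fn, tn, fp):
    """Share of all decisions (report or leave out) that were correct."""
    return (tp + tn) / (tp + fn + tn + fp)


# Illustrative counts only.
tp, fn, tn, fp = 8, 2, 6, 4
print(f"sensitivity: {sensitivity(tp, fn):.1%}")
print(f"specificity: {specificity(tn, fp):.1%}")
print(f"accuracy:    {accuracy(tp, fn, tn, fp):.1%}")
```

The functions assume non-empty denominators; a scanner with no verified positives or negatives at all would need a guard against division by zero.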

The decision criteria are summarized in figure 3, ordered in terms of importance from right to left along the arrow.

figure 3: ranking of the decision criteria in order of importance

vulnerability scanner candidates

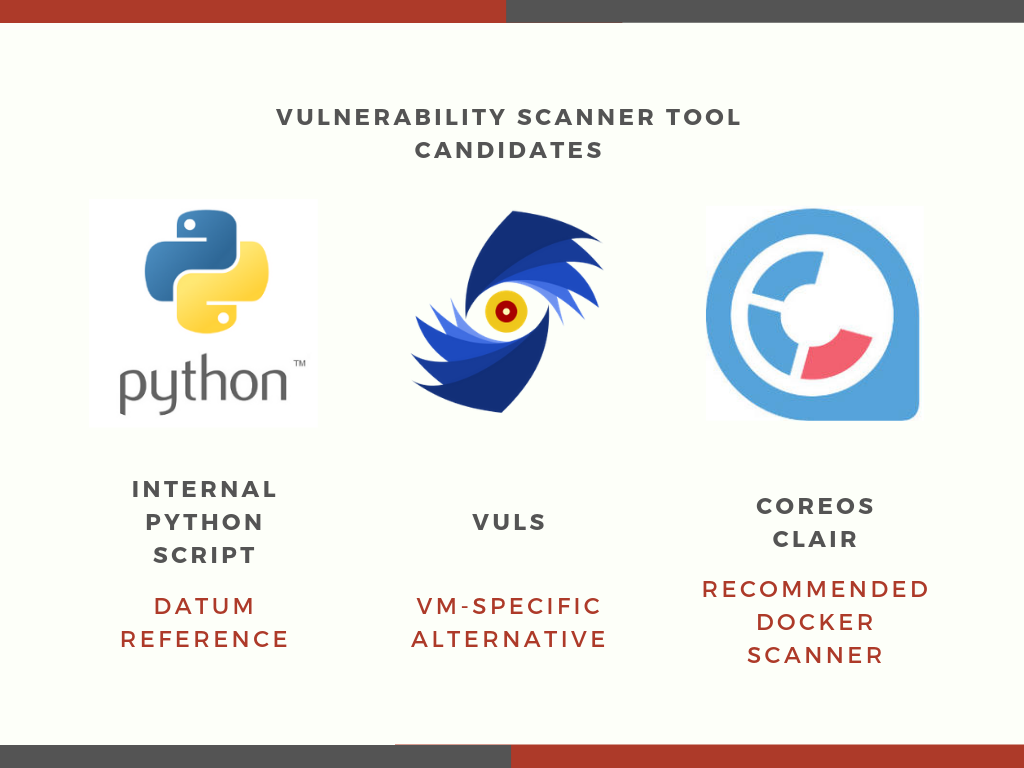

Three vulnerability scanner tools are compared: an internal Python tool, Vuls, as well as Clair.

The following is an exploration of their features and drawbacks, followed by a summary chart with respect to the aforementioned decision criteria.

option 1

The status quo vulnerability scanner is an internal Python script that scrapes the NIST National Vulnerability Database4 along with the RedHat Security API database5, both of which are cross-checked against the CentOS-Announce6 list. The scanner then looks up the Docker image’s packages in this master database of vulnerabilities, matching on package metadata such as the architecture and version information that would indicate the presence of security vulnerabilities. In brief, static scanning.

Scans using this tool were performed once a month, in line with the CentOS-Announce list of CentOS Errata and Security Advisories (CESA), a monthly community-maintained list of CentOS-specific vulnerabilities cherry-picked from RedHat’s errata.

It’s reasonable to assume that the more sources a vulnerability scanner refers to, the better its performance. In this case, the internal Python tool refers to 3 different vulnerability databases, which cover most of the vulnerability documentation related to the RedHat-based CentOS operating system.

However, scan results also depend on the nature of the sources: whether they contain vulnerabilities that have been resolved, how often they are updated, and how reliable the vulnerability data itself is. For example, because the CentOS-Announce list is based on RedHat’s errata, the CESAs from this list refer only to security vulnerabilities with fixes.

As a result, contrary to what the number of sources suggests, less than 15% of the vulnerabilities detected are truly present in the target environment scanned, according to an analysis of the scan results performed by the StarlingX security team. This indicates poor performance: only a small share of the reported vulnerabilities are true positives.

But nonetheless, the internal Python script is a relatively lightweight tool, easily portable and configurable with few dependency requirements. Since the StarlingX stack already includes Python, integrating the Python scanning tool natively in the StarlingX project would not introduce additional unrelated vulnerabilities from its dependencies.
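The static matching idea can be sketched as follows. This is not the internal tool’s code: the advisory records, their field names and the `EXAMPLE-…` identifiers are hypothetical, and `version_key` is a deliberately rough stand-in for real RPM version comparison, which also handles epochs, letters and release tags.

```python
import re


def version_key(version):
    """Rough comparison key: the numeric parts of a version string, in order."""
    return tuple(int(part) for part in re.findall(r"\d+", version))


def find_vulnerable(installed, advisories):
    """Return advisories whose fixed version is newer than the installed version."""
    findings = []
    for adv in advisories:
        current = installed.get(adv["package"])
        if current is None:
            continue  # package not in the image, so the advisory does not apply
        if version_key(current) < version_key(adv["fixed_in"]):
            findings.append((adv["id"], adv["package"], current, adv["fixed_in"]))
    return findings


# Installed packages as they might be read from an image's package database.
installed = {"libssh2": "1.4.3-12", "bash": "4.2.46-31"}

# Hypothetical advisory records for illustration.
advisories = [
    {"id": "EXAMPLE-0001", "package": "libssh2", "fixed_in": "1.8.1"},
    {"id": "EXAMPLE-0002", "package": "bash", "fixed_in": "4.2.46-30"},
    {"id": "EXAMPLE-0003", "package": "openssl", "fixed_in": "1.0.2"},
]

findings = find_vulnerable(installed, advisories)
for finding in findings:
    print(finding)
```

Nothing in the image is executed or probed here; the result depends entirely on package metadata and on the quality of the advisory data, which is why the choice of sources matters so much for a static scanner.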

option 2

Vuls7 is currently an experimental tool, being trialled in a playground environment as a transition away from the Python status quo tool. It’s been tested on a similar CentOS virtual machine instance (release 7.6.1810) and does a good job of detecting kernel package vulnerabilities. However, factoring in the cut-over to a more containerized project, detecting kernel vulnerabilities is neither easily feasible through the new Docker containers nor heavily required for these scan purposes. The latter is because, even though these vulnerabilities can be detected on the client side, the StarlingX cloud infrastructure wouldn’t be able to provide any corrective patches for the kernel itself.

As an agent-less scanner tool, Vuls is able to perform dynamic vulnerability scanning with root access, as well as both native and remote scans through ssh. On one hand, dynamic scanning leads to more rigorous security verification; on the other hand, it is a resource-heavy and relatively more intrusive scan technique compared to static scans. To compensate, Vuls also provides the option to scan quickly and non-intrusively, minimizing the load on the cloud server.

As another performance advantage, Vuls is an OS-aware scanning tool. In terms of relevant vulnerability databases, not only does Vuls refer to the list of CVEs provided by NIST like the internal Python tool, but it also includes RedHat’s Open Vulnerability and Assessment Language (OVAL)8 definitions as well as the RedHat Security Advisory database of errata. The OVAL list is maintained by a third party: the MITRE Corporation9, which standardizes and promotes publicly available information about security. Hence, OVAL definitions are more trustworthy for evaluating and consistently comparing vulnerabilities across different operating systems. Finally, Vuls also refers to the list of Common Platform Enumeration (CPE). Although it isn’t specific to RedHat or CentOS, it provides vulnerability information related to middleware and programming language libraries, which would be useful for scanning StarlingX’s custom program packages and infrastructure.

option 3

Taking the different decision requirements and criteria into account, Clair by CoreOS is a proposed replacement for the aforementioned tools.

Clair is an open-source static vulnerability scanning tool specifically for Docker containers. Its vulnerability databases are periodically updated from NIST, along with RedHat’s Security Advisory database.

One significant difference between Clair and its other scanning counterparts is that Clair in itself is simply a back-end service, although many third party integrations already exist. Notably, Harbor, an open-source Docker Registry framework, has Clair’s container scanning pre-integrated, which would make Clair simple to set up for users as well.

As a scanner back-end service, Clair provides a REST API for developers to tinker with, along with its source code on GitHub, which makes it more accessible to customize for better performance. Hence, despite its limited vulnerability databases relative to Vuls and the internal Python tool, Clair has great potential to be fully customized to scan StarlingX’s CentOS images.
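As a rough idea of what talking to that REST API looks like, the sketch below builds the two requests a client typically needs: one to submit a layer for indexing and one to fetch its report. The endpoint paths and JSON field names follow my recollection of the v1 API served by Clair v2.x and should be checked against the documentation of the deployed version; the address in `CLAIR_URL`, the layer names and the sample report are assumptions for illustration. The requests are only constructed here, not sent.

```python
import json
from urllib.request import Request

CLAIR_URL = "http://localhost:6060"  # assumed API address; adjust to the deployment


def build_submit_layer_request(name, path, parent_name=""):
    """POST /v1/layers: ask Clair to index one image layer."""
    payload = {
        "Layer": {
            "Name": name,
            "Path": path,                # where Clair can fetch the layer archive
            "ParentName": parent_name,   # empty for the base layer
            "Format": "Docker",
        }
    }
    return Request(
        f"{CLAIR_URL}/v1/layers",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def build_report_request(name):
    """GET /v1/layers/<name>: fetch features and vulnerabilities for a layer."""
    return Request(f"{CLAIR_URL}/v1/layers/{name}?features&vulnerabilities", method="GET")


def list_vulnerabilities(report):
    """Flatten a layer report into (package, version, vulnerability, fixed_by) tuples."""
    rows = []
    for feature in report.get("Layer", {}).get("Features", []):
        for vuln in feature.get("Vulnerabilities", []):
            rows.append((feature["Name"], feature["Version"],
                         vuln["Name"], vuln.get("FixedBy", "")))
    return rows


# Hand-written sample in the assumed response shape, not real scan output.
sample_report = {
    "Layer": {
        "Name": "layer-1",
        "Features": [
            {"Name": "examplepkg", "Version": "1.0-1",
             "Vulnerabilities": [{"Name": "EXAMPLE-0001", "FixedBy": "1.0-2"}]},
            {"Name": "cleanpkg", "Version": "2.0-1"},
        ],
    }
}

submit = build_submit_layer_request("layer-1", "/tmp/layer-1.tar", "layer-0")
print(submit.get_method(), submit.full_url)
print(list_vulnerabilities(sample_report))
```

Sending a request would be a matter of passing it to `urllib.request.urlopen`; layers are submitted from the base upwards so that each one can name its parent.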

But in all, Clair’s greatest advantage lies in its ability to statically traverse and query through specific Docker image layers, which is highly desirable for the purpose of scanning widespread, containerized architectures, without having to be installed natively. Hence, although Clair’s dependencies themselves do not overlap with those within StarlingX, it’s not necessary for the Clair tool to be locally installed on the cloud servers to perform vulnerability scans.

summary of vulnerability scanner candidates

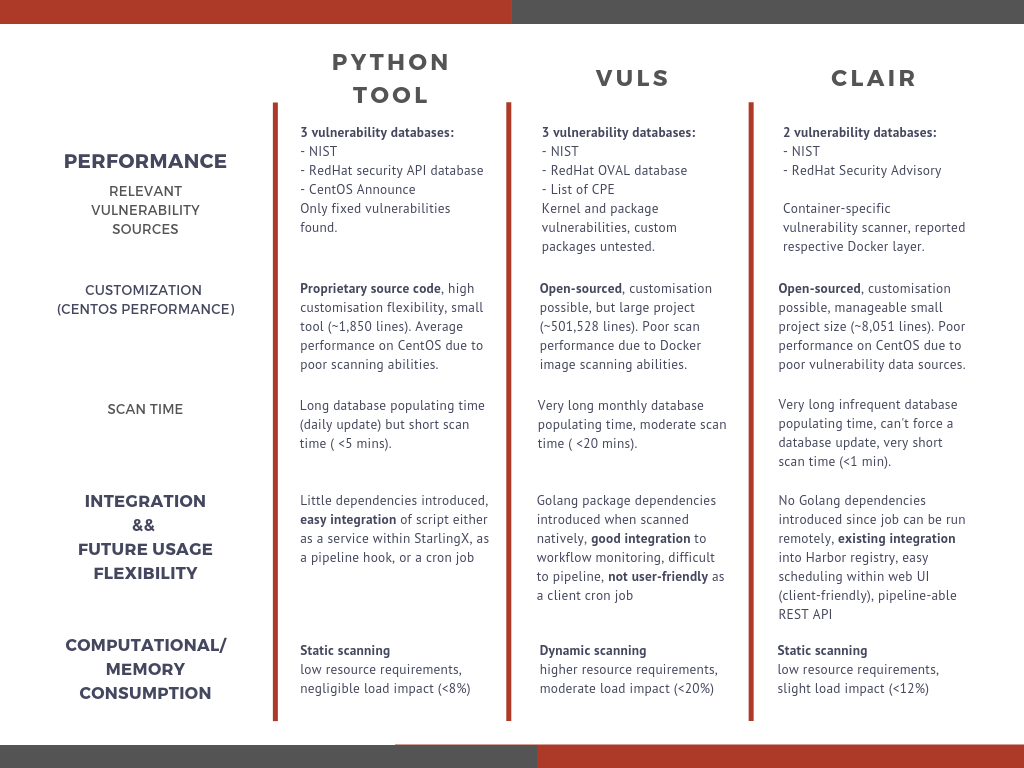

Below is a chart of the relevant features and drawbacks of each scanning tool, organized in terms of the aforementioned decision criteria.

The size of each project was approximated using the Linux `wc` command, such as `find . -name '*.go' | xargs wc -l`, as well as using the GitHub Chrome Extension tool “GLOC”.

The scan times were approximated on a playground environment launched using individual OpenStack Xenial instances of the same resource sizes (custom flavour of 4 CPU, 16 GB memory, 50 GB storage) on the same internal network.

Finally, the load impacts were obtained with the top command, watching for an approximate highest CPU usage percentage.

the decision: pitting the scanners against each other

As performance was the most important criterion, metrics of accuracy are needed. Thus, a comparison of the scan results was performed.

The internal Python tool identified 51 vulnerabilities, of which 28 were false positives, and a handful of others were cherry-picked out due to not having existing fixes or patches.

Vuls identified 16 vulnerabilities, of which only 6 were relevant. Many false positives came from assumed kernel vulnerabilities, or from other packages unrelated to StarlingX. The latter is because the Vuls scan was performed natively, so Vuls itself introduced many new packages. For instance, openssl and its vulnerabilities weren’t present in StarlingX, and were actually introduced by installing Vuls. Moreover, technical challenges and security concerns were raised about deploying Vuls to scan remotely, and the setup difficulty made it hard to distribute this tool readily for clients to use.

From this standpoint, it seems unlikely to be able to improve on Vuls’ accuracy, despite its many promising vulnerability data sources.

Finally, Clair reported 7 RedHat Security Advisories, which translates to around 30 vulnerabilities. All 7 were true positives, with fixes announced in errata for later package versions. Although accurate in terms of true positive rate, this performed weakly relative to the number of true positives found by the current internal Python tool.

Implicitly, these results show that both the Python tool and the Vuls scanner report many false positives, whereas Clair reports none but finds fewer vulnerabilities overall.
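As a rough reading of these numbers, the share of reported results that turned out to be relevant can be computed directly. For the internal Python tool only an upper bound is available from the figures above, since the handful of cherry-picked results is not quantified; Clair is counted in advisories rather than individual vulnerabilities.

```python
# Reported results per scanner, taken from the comparison above.
reported = {"internal Python tool": 51, "Vuls": 16, "Clair (advisories)": 7}

# Relevant results; for the Python tool this is an upper bound (51 - 28 false positives).
relevant_at_most = {"internal Python tool": 51 - 28, "Vuls": 6, "Clair (advisories)": 7}

shares = {name: relevant_at_most[name] / reported[name] for name in reported}
for name, share in shares.items():
    print(f"{name}: {share:.1%} of reported results relevant")
```

This share says nothing about what each scanner missed, which is why the comparison also needs the sensitivity and accuracy measures defined earlier.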

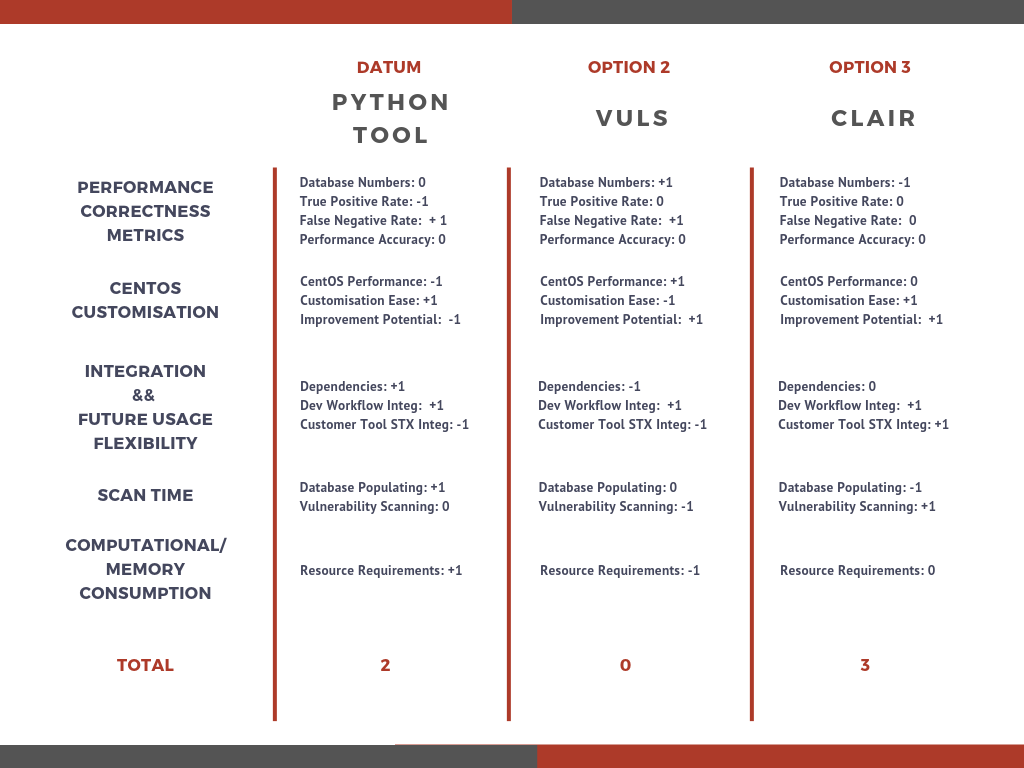

Aside from the performance, a summary of the weightings of each decision criterion per scanner candidate can be represented as a decision matrix, as shown:

It can be shown quantitatively that, due to the nature of the Clair scanner, it would be a better choice overall. However, it does lose out to Vuls and the internal Python tool in one aspect: database sources, and hence, performance. But as database sources are an aspect that can be improved upon, Clair holds high potential as a vulnerability scanning tool for StarlingX, since Clair’s scanning abilities are more suitable to the containerized architecture.

Now, the challenge following this decision is to bring Clair’s performance up to speed by drawing on the vulnerability databases used by Vuls and the Python tool.
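For readers unfamiliar with decision matrices, the sketch below shows the usual weighted-sum calculation. The criteria names, weights and scores are placeholders invented for this example and are not the values from the matrix in this report.

```python
# Hypothetical weights (higher = more important) and 1-5 scores per candidate.
weights = {
    "data sources": 5,
    "container suitability": 4,
    "deployment flexibility": 3,
    "scan time": 2,
    "resource use": 1,
}
scores = {
    "internal Python tool": {"data sources": 4, "container suitability": 2,
                             "deployment flexibility": 3, "scan time": 3, "resource use": 4},
    "Vuls": {"data sources": 5, "container suitability": 2,
             "deployment flexibility": 2, "scan time": 2, "resource use": 2},
    "Clair": {"data sources": 2, "container suitability": 5,
              "deployment flexibility": 5, "scan time": 4, "resource use": 4},
}


def weighted_total(score_row, weights):
    """Sum of score times weight over all criteria."""
    return sum(weights[criterion] * score_row[criterion] for criterion in weights)


totals = {name: weighted_total(row, weights) for name, row in scores.items()}
best = max(totals, key=totals.get)
for name, total in sorted(totals.items(), key=lambda item: -item[1]):
    print(f"{name}: {total}")
print("highest total:", best)
```

With placeholder values like these, a candidate can score lowest on the most heavily weighted criterion and still come out ahead overall, which mirrors the trade-off described above.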

acting on the decision: improvements on Clair

Now that the decision has been made, certain improvements related to the performance of Clair can be made to obtain the best possible results. In terms of vulnerability sources, as long as more entries are added to the vulnerability database, Clair’s scanner can query through Docker images and detect them. Hence, two more CentOS-related databases were introduced: the CentOS-Announce list, as well as the RedHat Security API database, which contains an open list of existing issues and vulnerabilities, similar to what is used in the internal Python tool.

With these different databases, on top of some more Docker metadata parsing and verifications, the output was converted to a third party, OS-independent standard to facilitate the final validation of the scan improvements.

These changes to the vulnerability databases then need to be verified.
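To give a flavour of what ingesting the CentOS-Announce list involves, here is a small sketch that pulls the advisory fields out of an announcement subject line. The subject format is an assumption based on how such announcements typically appear, the sample line uses a made-up advisory number and package name, and this is not the code of the customized Clair driver.

```python
import re

# Assumed subject layout: "CESA-<year>:<number> <severity> CentOS <release> <package> Security Update"
CESA_SUBJECT = re.compile(
    r"CESA-(?P<year>\d{4}):(?P<number>\d+)\s+"
    r"(?P<severity>\w+)\s+CentOS\s+(?P<release>\d+)\s+"
    r"(?P<package>.+?)\s+Security Update"
)


def parse_cesa_subject(subject):
    """Return the advisory fields as a dict, or None if the subject does not match."""
    match = CESA_SUBJECT.search(subject)
    if match is None:
        return None
    fields = match.groupdict()
    fields["id"] = f"CESA-{fields['year']}:{fields['number']}"
    return fields


# Made-up subject line for illustration.
subject = "[CentOS-announce] CESA-2019:0000 Important CentOS 7 example-pkg Security Update"
advisory = parse_cesa_subject(subject)
print(advisory)
```

Entries parsed this way would still need the affected and fixed package versions from the announcement body before a scanner could match them against an image.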

validation of Clair’s improvements from v2.0.7

All in all, it was found that out of 57 total results as a union of all scans of CentOS 7.6.1810, the latest Clair release (v2.0.7 at the time) had:

- true positives: 7
- false positives: 0
- true negatives (relative estimate): 4
- false negatives (relative): 37

which resulted in a true positive accuracy rate of 15.9%, and an overall accuracy rate of 35.1%.

Meanwhile, with the new data source improvements, this customized version of the Clair scanner had:

- true positives: 43
- false positives: 6
- true negatives (relative estimate): 3
- false negatives (relative): 5

which resulted in a true positive accuracy rate of 97.7%, and an overall accuracy rate of 80.7%.

Below is a tabulated list of the scan results as a side-by-side between v2.0.7 and the customized version of Clair around CentOS images specifically.

| CVE | CoreOS Clair v2.0.7 release | Improved CentOS Driver based on Clair v2.0.7 | Package | Image’s rpm Version | Affected Versions (MITRE) | Fixed Version | Verdict |
|---|---|---|---|---|---|---|---|
| CVE-2018-1000876 | :( | y | binutils | 2.27-34 | <2.32 | n/a | affected with RHEL 7 |
| CVE-2018-1113 | setup | 2.8.71-10 | 2.8.71-10 | resolved in centos:7.6.1810 image | |||
| CVE-2018-12404 | y | y | nss | 3.36.0-7.el7_5 | 3.36.6 | affected with RHEL 7 | |
| CVE-2018-14618 | y | libcurl | 7.29.0-51 | >7.15.4 | 7.61.1 | affected with RHEL 7, 32 bit systems only | |
| CVE-2018-14647 | y | y | python | 2.7.5 | 2.7.0 | 2.7.16 | affected with RHEL 7 |
| CVE-2018-15688 | y | y | systemd-networkd | 219-62.el7 | 219-62.el7_6.2 | affected with RHEL 7, but does not ship systemd-networkd by default | |
| CVE-2018-16428 | y | :( | glib2 | 2.54.2 | 2.56.1 | n/a | affected with RHEL 7 |
| CVE-2018-16429 | y | :( | glib2 | 2.54.2 | 2.56.1 | n/a | affected with RHEL 7 |
| CVE-2018-16842 | y | y | curl | 7.29.0-46 | 7.14.1 | 7.62.0 | affected with RHEL 7 |
| CVE-2018-16864 | y | y | systemd-journald | 219-62.el7 | v203 | v241 | affected with RHEL 7 |
| CVE-2018-16865 | y | y | systemd-journald | 219-62.el7 | v201 | v241 | affected with RHEL 7 |
| CVE-2018-16866 | :( | y | systemd-journald | 219-62.el7 | v221 | v242 | affected with RHEL 7, change was backported to v219 |
| CVE-2018-17358 | y | y | binutils | 2.27-34 | ~2.31 | n/a | CVE under investigation |
| CVE-2018-17359 | y | y | binutils | 2.27-34 | ~2.31 | n/a | CVE under investigation |
| CVE-2018-17360 | y | y | binutils | 2.27-34 | ~2.31 | n/a | CVE under investigation |
| CVE-2018-17794 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7 |
| CVE-2018-17985 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7 |
| CVE-2018-18484 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-18508 | y | y | nss | 3.36.0-7.el7_5 | 3.41.1 | affected with RHEL 7, will not fix state | |
| CVE-2018-18605 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-18606 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-18607 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-18700 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-18701 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-19211 | y | y | ncurses | 5.9-14.20130511 | 6.1 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-19217 | y | ncurses | 5.9-14.20130511 | 6.1 | n/a | affected with RHEL 7, will not fix state | |
| CVE-2018-19932 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7 |
| CVE-2018-20002 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7 |
| CVE-2018-20482 | y | y | tar | 1.26-35 | < 1.30 | 1.3 | affected with RHEL 7, will not fix state |
| CVE-2018-20483 | y | y | curl, wget | 7.29.0-51 | 7.29 | 7.63.0 | only curl affected with centos:7.6.1810, will not fix state |
| CVE-2018-20657 | y | y | binutils | 2.27-34 | 2.31.1 | n/a | affected with RHEL 7, will not fix state |
| CVE-2018-20673 | y | y | binutils | 2.27-34 | ~2.31 | n/a | affected with RHEL 7 |
| CVE-2018-5742 | y | :( | bind (bind-license) | 9.9.4-72 | 9.9.4-65 | 9.9.4-73 | affected with RHEL 7 |
| CVE-2019-3815 | y | y | systemd-journald | 219-62.el7 | V219-62 | 219-62.el7_6.3 | affected with RHEL 7 |
| CVE-2019-3855 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3856 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3857 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3858 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3859 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3860 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3861 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3862 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-3863 | :( | y | libssh2 | 1.4.3-12 | <= 1.80 | 1.8.1 | affected with RHEL 7 |
| CVE-2019-5010 | :( | y | python | 2.7.5 | 2.7.11, 3.7.2 | n/a | affected with RHEL 7, but centos:7.6.1810 does not have related python version |
| CVE-2019-6454 | y | y | systemd | 219-62.el7 | < v239 | v241 | affected with RHEL 7 |
| CVE-2019-9072 | y | binutils | 2.27-34 | ~2.32 | n/a | not affected with RHEL 7 | |
| CVE-2019-9073 | y | binutils | 2.27-34 | ~2.32 | n/a | not affected with RHEL 7 | |
| CVE-2019-9074 | y | y | binutils | 2.27-34 | ~2.32 | n/a | affected with RHEL 7 |
| CVE-2019-9075 | y | y | binutils | 2.27-34 | ~2.32 | n/a | affected with RHEL 7 |
| CVE-2019-9076 | y | binutils | 2.27-34 | ~2.32 | n/a | not affected with RHEL 7, not a bug state | |
| CVE-2019-9077 | y | y | binutils | 2.27-34 | ~2.32 | n/a | affected with RHEL 7 |
| CVE-2019-9169 | y | y | glibc | 2.17-260 | ~2.29 | n/a | affected with RHEL 7 |
| CVE-2019-9192 | y | y | glibc | 2.17-260 | ~2.29 | n/a | under investigation |
| CVE-2019-9633 | y | y | glib2 | 2.56.1-2 | 2.59.2 | 2.59.2-1 | affected with RHEL 7, but should not be present with current glib2 version |
| CVE-2019-9636 | y | y | python | 2.7.5 | 2.7.0 | 2.7.16 | affected with RHEL 7 |
| CVE-2019-9923 | y | tar | 1.26-35 | 1.32 | affected with RHEL 7 | ||
| CVE-2019-9924 | y | bash | 4.2.46-31 | < 4.4 | n/a | affected with RHEL 7 |

conclusion

Different solutions are needed for different requirements: the nature of the vulnerability scanner should conform with the nature of the infrastructure, as all things should be. That’s indeed the reason why we have such diverse solutions for even the most mundane technology problems. But even then, the most suitable solution is still unlikely to be ideal. In that case, there’s no harm in standing on the shoulders of giants when possible: it’s not only more efficient from a project’s perspective, but it’s also impactful in terms of open-source contributions.

It was a highly satisfying experience to improve on existing open-source tools not only for Wind River’s purposes, but for the greater public to use as well.

bibliography

1. Wind River Titanium Cloud. Wind River Systems. Retrieved from https://www.windriver.com/products/titanium-cloud/
2. StarlingX. Retrieved from https://www.starlingx.io/
3. StarlingX Overview. OpenStack. Retrieved from https://superuser.openstack.org/articles/starlingx-overview/
4. National Vulnerability Database. Information Technology Laboratory. Retrieved from https://nvd.nist.gov/
5. RedHat Security Data API Documentation. RedHat Customer Portal. Retrieved from https://access.redhat.com/documentation/en-us/red_hat_security_data_api/1.0/html/red_hat_security_data_api/index
6. CentOS Announce. CentOS.org. Retrieved from https://lists.centos.org/mailman/listinfo/centos-announce
7. Vuls Vulnerability Scanner. GitHub/future-architect. Retrieved from https://github.com/future-architect/vuls
8. OVAL Definitions for RedHat Enterprise Linux. RedHat. Retrieved from https://www.redhat.com/security/data/oval/
9. Open Vulnerability and Assessment Language, A Community-Developed Language for Determining Vulnerability and Configuration Issues on Computer Systems. MITRE OVAL. Retrieved from http://oval.mitre.org/